Batik’s Pattern Recognition and Generation: Review and Challenges


JURNAL ILMIAH MERPATI VOL. 10, NO. 2 AUGUST 2022

p-ISSN: 2252-3006

e-ISSN: 2685-2411


Dewa Made Sri Arsa (a,1), Anak Agung Ngurah Hary Susila (a,2), Desak Ayu Sista Dewi (b,3), Ni Putu Sutramiani (a,4), I Wayan Agus Surya Darma (c,5)

a Department of Information Technology, Universitas Udayana, Indonesia
b Department of Industrial Engineering, Universitas Udayana, Indonesia
c Informatics Engineering, STMIK STIKOM Indonesia, Indonesia
e-mail: (1) dewamsa@unud.ac.id, (2) harysusila@unud.ac.id, (3) sistadasd@unud.ac.id, (4) sutramiani@unud.ac.id, (5) surya@stiki-indonesia.ac.id

Abstract

Batik is one of the cultural heritages recognized by UNESCO. Intelligent systems have emerged as one solution to support the preservation of this cultural heritage. This study explores current achievements in applying artificial intelligence to batik images. It provides a systematic investigation and presents the current progress and hot topics in the recognition and generation of Batik images. It also summarizes several Batik datasets together with their state of the art, and the discussion section projects several topics for future work.

Keywords: artificial intelligence, batik recognition, batik generation, image processing

Abstract

Batik is a cultural heritage acknowledged by UNESCO. Intelligent systems offer one way to take part in preservation programs for this heritage. This study explores the current state of the art in applying artificial intelligence to Batik images. It provides a systematic investigation and presents the current progress and open issues in the recognition and generation of Batik images. Furthermore, it presents several Batik datasets and their state of the art and outlines several directions for future work in the discussion.

Keywords: artificial intelligence, batik recognition, batik generation, image processing

Batik is an Indonesian cultural heritage that carries deep philosophical meaning and high value. The United Nations Educational, Scientific and Cultural Organization (UNESCO) has acknowledged it as one of the world's cultural heritages [1]. Batik can be found in many regions of Indonesia, and its patterns differ from region to region because each pattern represents the tradition and culture of its region.

Generally, Batik has two types of patterns [2]. The first is geometric, for example Ceplok, Banji, Parang, Kawung, and Mega Mendung. The second is non-geometric, for example Semen, Cuwiri, Lunglungan, and Buketan. Craftsmen usually arrange these patterns repeatedly on the fabric [3]. The variety of batik patterns makes preserving this cultural heritage challenging for the Indonesian government. Moreover, detailed information about each pattern is hard to collect because only a few people study it.

Information and communication technologies (ICT) bring new options for presenting and sharing cultural heritage, and these options are suitable as preservation tools. Cantoni et al. [4] mention five areas where ICT is useful for cultural heritage: easy access, better experience, connecting locals and visitors, dis-intermediation to improve business activities, and support for training and education. To address the preservation issue, particularly for Batik, computer science researchers worldwide have proposed automatic systems that provide details of specific batik patterns.

Besides, various studies have built other kinds of systems, including systems based on artificial intelligence [5]–[7].

In this study, we summarise previous approaches that have been proposed to analyse batik patterns. We collect literature published from 2015 to the present from various sources and then group it by approach similarity. The contributions of this paper can be divided into four parts as follows.

1. Categorise all approaches that have been applied to analyse Batik patterns.

2. Summarise all publicly accessible Batik datasets, together with the challenge and current state of the art for each dataset.

3. Present the current benchmark method for each Batik dataset.

4. Present future research directions related to Batik.

The rest of this study is organised as follows. Section II analyses various batik pattern recognition approaches. Section III presents batik pattern synthesis methods. Section IV describes several publicly available batik datasets and their state of the art. Section V concludes our findings with future directions.

This review follows the systematic review guidelines used in [8]. First, we gathered literature from sources such as IEEE Xplore, ScienceDirect, and the ACM Digital Library. Since the literature on this specific subject is limited, we further explored secondary indexers through Google Scholar and ResearchGate. The collected literature was then filtered by quality. Second, we identified the problem and mapped the proposed method for each refined paper. Third, we analysed the open problems and derived directions for future research.

After collecting and refining the literature, we obtained 22 papers. We then built a state-of-the-art matrix and found two main tasks related to Batik, namely recognizing Batik motifs and synthesizing Batik patterns, which are explained further in sections 3 and 4.

Table 1 presents several studies based on the image retrieval approach. Most of them prefer texture-based features. Fahmi et al. [9] combine four feature extraction methods, which produces high-dimensional features, and then reduce and filter those features using either sequential forward floating selection (SFFS) [10] or principal component analysis (PCA) [11], comparing the performance of the two. They report that PCA yields better precision than SFFS but a slower retrieval execution time; however, the difference is not significant. Similar approaches are used in [12], [13]. Nurhaida et al. [12] experimentally compare several feature combinations, such as Gabor features, log-Gabor features, grey level co-occurrence matrices (GLCM), and local binary pattern (LBP) features. The results show that all combinations provide high precision but low recall. Moreover, Prasetyo et al. [13] combine ordered dither block truncation coding (ODBTC) with particle swarm optimization (PSO) to find optimal similarity weighting constants that enhance retrieval performance. From the ODBTC representation, they extract a colour feature and a texture feature.
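The retrieval pipeline described above (a high-dimensional feature vector, PCA reduction, Euclidean ranking, then precision and recall) can be sketched as follows. This is a minimal illustration, not the code of [9] or [12]: the 20-dimensional random vectors are hypothetical stand-ins for fused Gabor/log-Gabor/GLCM/LBP features, SFFS is not shown, and all function names are our own.

```python
import numpy as np

def pca_fit(features, n_components):
    """Learn a PCA projection (mean and top principal directions) via SVD."""
    mean = features.mean(axis=0)
    # Rows of vt are principal directions ordered by decreasing variance.
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_components].T

def pca_transform(features, mean, components):
    """Project feature vectors onto the retained principal directions."""
    return (features - mean) @ components

def retrieve(query, database, k):
    """Indices of the k database vectors nearest to the query (Euclidean distance)."""
    distances = np.linalg.norm(database - query, axis=1)
    return np.argsort(distances, kind="stable")[:k]

def precision_recall_at_k(retrieved_labels, query_label, n_relevant):
    """Precision and recall for one query, given the labels of the retrieved images."""
    hits = int(np.sum(retrieved_labels == query_label))
    return hits / len(retrieved_labels), hits / n_relevant

# Toy usage: two well-separated synthetic classes of 20-D "texture features".
rng = np.random.default_rng(0)
database = np.vstack([rng.normal(0.0, 0.1, (5, 20)), rng.normal(10.0, 0.1, (5, 20))])
labels = np.array([0] * 5 + [1] * 5)
mean, components = pca_fit(database, n_components=2)
reduced = pca_transform(database, mean, components)
top = retrieve(reduced[0], reduced, k=5)
precision, recall = precision_recall_at_k(labels[top], query_label=0, n_relevant=5)
print(precision, recall)  # 1.0 1.0 on this well-separated toy data
```

On real Batik features, classes overlap far more than in this toy example, which is one way the "high precision but low recall" pattern reported in [12] can arise when only a few of the many relevant images are retrieved.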

The method for matching the query image against the database also affects retrieval performance. Prasetyo et al. [13] report the precision and recall trends of the L1, L2, χ², and Canberra distances. The Canberra distance outperformed the other measures in three of the four scenarios, with block sizes of 4×4, 8×8, and 32×32; with a block size of 16×16, it ranked second. Furthermore, Prasetyo et al. [13] use particle swarm optimization to tune the similarity weighting constants α1, α2, and α3 of the Canberra distance, which improves its accuracy by 0.5%.
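The four distance measures compared in [13], and the role of the weighting constants, can be illustrated with the sketch below. It assumes, as is common in the ODBTC retrieval literature, that the final similarity is a weighted sum of per-component distances with weights α1, α2, α3; the exact feature split and the "modified" Canberra form used in [13] are not given here, so the standard Canberra distance with a small epsilon guard is used instead. The descriptor values are hypothetical, and PSO itself is not shown.

```python
import numpy as np

EPS = 1e-12  # guards against division by zero when both bins are empty

def l1_distance(x, y):
    return float(np.sum(np.abs(x - y)))

def l2_distance(x, y):
    return float(np.sqrt(np.sum((x - y) ** 2)))

def chi_square_distance(x, y):
    # One common form for non-negative histograms; some texts add a factor 1/2.
    return float(np.sum((x - y) ** 2 / (x + y + EPS)))

def canberra_distance(x, y):
    return float(np.sum(np.abs(x - y) / (np.abs(x) + np.abs(y) + EPS)))

def weighted_distance(query_parts, candidate_parts, alphas, metric=canberra_distance):
    """Combine per-component distances as sum_i alpha_i * metric(q_i, c_i)."""
    return sum(a * metric(q, c) for a, q, c in zip(alphas, query_parts, candidate_parts))

# Hypothetical three-component descriptors for a query and one database image.
q = [np.array([1.0, 2.0, 3.0]), np.array([0.5, 0.5]), np.array([4.0, 0.0])]
c = [np.array([1.0, 0.0, 3.0]), np.array([0.5, 0.5]), np.array([2.0, 2.0])]
alphas = [1.0, 1.0, 0.5]  # the constants that a search such as PSO would tune
print(weighted_distance(q, c, alphas))
```

Because the Canberra distance divides each difference by the magnitude of the two bins, small bins contribute as much as large ones, which is one plausible reason it behaves differently from L1 and L2 on histogram features.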

Table 1 Content-based image retrieval approaches

| Authors | Feature extraction method | Retrieval method |
|---|---|---|
| [9] | Features: Gabor filter, log-Gabor filter, GLCM, LBP. Feature selection using SFFS and PCA | Euclidean distance |
| [33] | Scale-invariant feature transform with Hough transform | Euclidean distance |
| [12] | Feature fusion (Gabor, log-Gabor, GLCM, LBP) | Euclidean distance |
| [13] | Ordered dither block truncation coding (ODBTC) | L1, L2, χ², and modified Canberra distance |

Table 2 Batik pattern classification using various feature extraction and classification methods

| Authors | Feature extraction method | Classification |
|---|---|---|
| [2] | Various pretrained convolutional neural networks | Softmax |
| [14] | Fast discrete curvelet transform + hue saturation value | K-nearest neighbour |
| [15] | Gabor filter, log-Gabor filter, gray level co-occurrence matrix, and LBP followed by principal component analysis | Deep neural network |
| [16] | Gray level co-occurrence matrix, deep belief network, convolutional neural network | SVM, Softmax, fully connected layers + Softmax |
| [20] | Pretrained VGG-16 | Random forest and SVM |
| [34] | Gray level co-occurrence matrix + statistical RGB colour-channel features | Backpropagation neural network |
| [35] | Gray level co-occurrence matrix + shape features | Three-layer neural network |
| [36] | Gray level co-occurrence matrix and shape features + feature selection using information gain | Three-layer neural network |
| [37] | Gray level co-occurrence matrix + SURF | K-nearest neighbour |
| [38] | IncRes | Fully connected layers + Softmax |
| [39] | Geometric invariant moment | K-nearest neighbour |
| [32] | Pretrained VGG-16 and VGG-19 | Fully connected layers + Softmax |
| [40] | Gray level co-occurrence matrix | Three-layer backpropagation neural network |
| [28] | Pretrained VGG-16 | Various classifiers |
| [28] | Multi texton co-occurrence descriptor | SVM |

3.2. Classification-based Approach

The classification-based approach can be divided into two parts, as shown in Table 2. The first part is feature extraction, which derives a distinctive representation from the given image in order to improve the performance of the second part, the classifier.
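The second stage can be as simple as the k-nearest neighbour classifier used in [14], [37], and [39]. The sketch below assumes the first stage has already produced one feature vector per image; the 2-D vectors are hypothetical placeholders for curvelet, GLCM, or SURF features, and `knn_predict` is our own illustrative name, not an API from those papers.

```python
from collections import Counter

import numpy as np

def knn_predict(train_x, train_y, test_x, k=3):
    """Label each test vector by majority vote among its k nearest training vectors."""
    predictions = []
    for x in test_x:
        distances = np.linalg.norm(train_x - x, axis=1)
        nearest = np.argsort(distances, kind="stable")[:k]
        # Counter keeps first-seen order, so ties go to the class seen first
        # among the sorted neighbours, i.e. the class with the closest member.
        votes = Counter(train_y[nearest].tolist())
        predictions.append(votes.most_common(1)[0][0])
    return np.array(predictions)

# Toy 2-D "feature vectors" standing in for the output of a feature extractor.
train_x = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]])
train_y = np.array([0, 0, 0, 1, 1, 1])
test_x = np.array([[0.2, 0.2], [5.5, 5.5]])
print(knn_predict(train_x, train_y, test_x, k=3))  # [0 1]
```

Separating the two stages this way is what lets the studies in Table 2 swap classifiers (k-NN, SVM, random forest, neural networks) on top of the same features.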

Texture-based approaches dominate feature extraction. Suciati et al. [14] combine fast discrete curvelet transform features with hue saturation value (HSV) colour features and classify them with k-nearest neighbour. Statistical features computed from the grey level co-occurrence matrix, such as contrast, correlation, energy, homogeneity, and entropy, dominate the texture-based methods [15]. Nurhaida et al. [15] combine these features with principal component analysis for feature reduction, followed by a deep neural network. They also show that batch normalization boosts the performance of the deep neural network, a significant improvement over the standard deep neural network.
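The five GLCM statistics named above can be computed as in the following sketch. It is a from-scratch numpy illustration under stated assumptions: 8-bit greyscale input quantised to 8 levels, a single horizontal offset, a symmetric normalised matrix, energy defined as the angular second moment (some libraries report its square root), and correlation set to 1.0 by convention when a marginal standard deviation is zero. Parameter choices in [15] may differ.

```python
import numpy as np

def glcm(image, dx=1, dy=0, levels=8):
    """Symmetric, normalised GLCM for one non-negative pixel offset (dx, dy)."""
    # Quantise 8-bit grey values (0..255) into `levels` bins.
    q = (np.asarray(image, dtype=np.int64) * levels) // 256
    h, w = q.shape
    reference = q[: h - dy, : w - dx]
    neighbour = q[dy:, dx:]
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (reference.ravel(), neighbour.ravel()), 1.0)
    counts = counts + counts.T  # count each pair in both directions
    return counts / counts.sum()

def glcm_features(p):
    """Contrast, correlation, energy, homogeneity and entropy of a normalised GLCM."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(p * (j - mu_j) ** 2))
    if sd_i > 0 and sd_j > 0:
        correlation = float(np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j))
    else:
        correlation = 1.0  # convention for a constant image
    nonzero = p[p > 0]
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "correlation": correlation,
        "energy": float(np.sum(p ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "entropy": float(-np.sum(nonzero * np.log2(nonzero))),
    }

# A 4x4 black/white checkerboard: every horizontal neighbour pair differs.
checkerboard = (np.indices((4, 4)).sum(axis=0) % 2) * 255
print(glcm_features(glcm(checkerboard)))
```

In practice, features from several offsets and directions are usually concatenated before reduction with PCA, as in the pipelines summarised in Table 2.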

Deep learning methods also take parts in recognizing Batik’s patterns. Handhayani et al. [16] found that VGG16 [17] and ResNet [18] were underperformed. The deep of the network is not continuously increase the accuracy of such a model on a particular problem. A simple

Table 3 Generation and synthesize Batik’s pattern method

|

Authors |

Synthesize approach |

|

[25] |

GAN with captions |

|

[26] |

deep convolutional generative adversarial networks with modification |

|

[27] |

BatikGAN, BatikGAN with style, BatikGAN with style and local features |

Table 4 Publicly available of Batik dataset. Note: NoC refers to number of classes

|

No |

Dataset name |

Class number |

Total images |

|

1 |

Gultom dataset |

5 |

2.092 |

|

2 |

Minarno dataset |

50 |

300 |

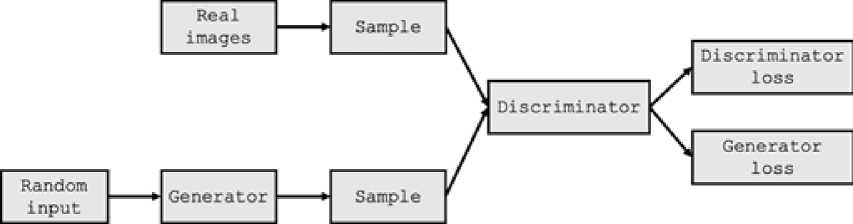

Figure 1 Generative adversarial networks architecture proposed in [26], [27]

convolutional neural network consisting of three convolution layers can place in the third position of accuracy in [16].

In contrast with Handhayani's results in [16], Rasyidi et al. [2] recently showed that pretrained networks can be used to recognise the ornaments on Batik images. Rasyidi et al. [2] compared the accuracy, precision, and recall of AlexNet and various types of DenseNet, ResNet, SqueezeNet, and VGG. Gultom et al. [19] and Arsa et al. [20] report similar results, where pretrained networks perform well when transferred to another domain.
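The three metrics used in [2] can be computed from a confusion matrix as sketched below. The averaging scheme in [2] is not stated here, so this sketch assumes macro-averaging (an unweighted mean over classes); the labels are a hypothetical toy example.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def macro_scores(y_true, y_pred, n_classes):
    """Accuracy plus macro-averaged precision and recall."""
    cm = confusion_matrix(np.asarray(y_true), np.asarray(y_pred), n_classes)
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # how often each class was predicted
    actual = cm.sum(axis=1)     # true number of samples in each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    accuracy = tp.sum() / cm.sum()
    return float(accuracy), float(precision.mean()), float(recall.mean())

# Hypothetical predictions for three Batik classes, two images each.
print(macro_scores([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3))
```

Reporting precision and recall alongside accuracy matters when classes are unbalanced, because a model can reach high accuracy while rarely recognising a small class.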

Batik pattern synthesis is a newer challenge in batik image processing. It enables fusing several ornaments or generating patterns in different styles using artificial intelligence. As Table 3 shows, all of the methods are built on generative adversarial networks (GANs), which are known for their image generation performance [21]–[27].
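For reference, these methods build on the adversarial game of Goodfellow et al. [21], in which a generator G maps random noise z to an image and a discriminator D estimates the probability that an image comes from the real data. The original minimax objective is shown below; the additional style and local terms of BatikGAN [27] are not reproduced here.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator is trained to push D(x) towards 1 on real Batik images and D(G(z)) towards 0 on generated ones, while the generator is trained to make D(G(z)) approach 1.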

Figure 1 shows the general architecture used in [25]–[27]. Amalia et al. [25] and Abdurrahman et al. [26] use a standard generative adversarial network, as shown in Figure 1a; they differ in how the generator and discriminator are constructed. Chu et al. [27] propose a different design: their method receives two patches of batik patterns as input and synthesizes a new batik image from them.

BatikGAN [27] performs better than [25], [26]. Its generated images show more detail in the pattern, whereas the approaches of Amalia et al. [25] and Abdurrahman et al. [26] produce more unstructured patterns when the image is scaled up. The three designed discriminators, namely a pretrained VGG-19, a global discriminator, and a local discriminator, improve synthesis quality because each discriminator focuses on one task. For example, the global discriminator checks small local areas, and the local discriminator determines the details of the generated images.

Table 5 Results of previous research using the Minarno dataset

| Authors | Method | Performance |
|---|---|---|
| [28] | Multi texton co-occurrence descriptor | Accuracy: 100% |
| [20] | Pretrained VGG16 + random forest | Accuracy: 97.50 ± 2.32% |
| [29] | Multi texton histogram and probabilistic neural network | Accuracy: 92% |
| [30] | Multi texton histogram + k-nearest neighbours | Accuracy: 82% |
| [31] | Multi structure co-occurrence descriptor | Average precision: 81.47% |

Table 6 Gultom dataset state of the art

| Authors | Method | Accuracy |
|---|---|---|
| [32] | Pretrained VGG19 | 89 ± 2.8% |
| [19] | Pretrained VGG16 | 89 ± 7% |
| [15] | Deep neural networks | 85.57% |

Most previous studies built their datasets by crawling Batik images from search engines such as Google. We found only two freely published datasets; Table 4 shows their details. The datasets vary in the number of classes and the total number of images. The Gultom dataset provides more images per class, whereas the Minarno dataset has the most classes, with six images per class.
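The per-class comparison above follows directly from the figures in Table 4. The short computation below reports the average number of images per class; it assumes nothing about how evenly images are distributed across classes, which Table 4 does not state.

```python
# Dataset sizes from Table 4: name -> (number of classes, total images).
DATASETS = {"Gultom": (5, 2092), "Minarno": (50, 300)}

def images_per_class(n_classes, n_images):
    """Average number of images per class (not necessarily the per-class count)."""
    return n_images / n_classes

for name, (n_classes, n_images) in DATASETS.items():
    print(f"{name}: {images_per_class(n_classes, n_images):.1f} images per class")
```

With only six images per class in the Minarno dataset, every train/test split leaves very few test images per class, so a single misclassification moves accuracy noticeably.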

Table 5 shows the progress of classification methods on the Minarno dataset. It suggests that the Minarno dataset has effectively been solved, since the current state of the art already reaches the maximum accuracy of 100% [28]. Arsa et al. [20] use a pretrained VGG16 combined with a random forest, and the result is still competitive. Using the same pretrained network, we replaced the random forest with logistic regression and obtained 100% accuracy consistently when the experiment was repeated.
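Replacing the random forest with logistic regression means training a linear softmax classifier on top of fixed CNN features. The sketch below is a minimal numpy version of multinomial logistic regression trained by full-batch gradient descent; it is not the code used in our experiment. It assumes the VGG16 features have already been extracted and standardised; here, synthetic 2-D vectors stand in for them, and the reported accuracy is training accuracy on toy data only.

```python
import numpy as np

def train_softmax_regression(x, y, n_classes, lr=0.1, epochs=500, weight_decay=1e-4):
    """Multinomial logistic regression fitted by full-batch gradient descent."""
    n, d = x.shape
    w = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[y]
    for _ in range(epochs):
        logits = x @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad_logits = (probs - onehot) / n  # gradient of the mean cross-entropy
        w -= lr * (x.T @ grad_logits + weight_decay * w)
        b -= lr * grad_logits.sum(axis=0)
    return w, b

def predict(x, w, b):
    return np.argmax(x @ w + b, axis=1)

# Toy usage: standardised synthetic vectors stand in for pretrained-CNN features.
rng = np.random.default_rng(0)
centres = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
y = np.repeat(np.arange(3), 20)
x = centres[y] + rng.normal(0.0, 0.5, (60, 2))
x = (x - x.mean(axis=0)) / x.std(axis=0)
w, b = train_softmax_regression(x, y, n_classes=3)
accuracy = float(np.mean(predict(x, w, b) == y))
print(accuracy)
```

A linear classifier has far fewer free parameters than a random forest, which may help when, as in the Minarno dataset, each class has only a handful of training images.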

Table 6 provides the current state of the art for the Gultom dataset. The approach of Agastya et al. [32] is the current best method. Although its accuracy equals that of Gultom et al. [19], its smaller standard deviation indicates that the method of Agastya et al. [32] is more stable. Moreover, a non-convolutional deep neural network can also compete here, as shown by Nurhaida et al. [15], who incorporate batch normalization.
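The stability argument rests on summarising repeated runs as mean ± standard deviation. The sketch below shows that summary for two sets of hypothetical per-run accuracies with the same mean but different spread; these numbers are invented for illustration and are not the results of [19] or [32]. It assumes the sample standard deviation (ddof=1); papers do not always state which variant they report.

```python
import numpy as np

def summarise_runs(accuracies):
    """Mean and sample standard deviation (ddof=1) of accuracies from repeated runs."""
    acc = np.asarray(accuracies, dtype=float)
    return float(acc.mean()), float(acc.std(ddof=1))

# Hypothetical per-run accuracies: equal means, different spread.
stable = summarise_runs([0.87, 0.89, 0.91])
unstable = summarise_runs([0.80, 0.89, 0.98])
for name, (mean, sd) in [("stable", stable), ("unstable", unstable)]:
    print(f"{name}: {100 * mean:.1f} ± {100 * sd:.1f}%")
```

Two methods with identical mean accuracy can therefore differ considerably in how much a single run can be trusted, which is the distinction drawn between [32] and [19] in Table 6.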


Various approaches have been applied to analyse Batik images, using either hand-crafted features or learned features such as those of neural networks. Recognition is still an open problem in Batik classification because many motifs have not yet been compiled into datasets. Batik motif generation is an attractive topic because it has rarely been studied and is still at an early stage of development. This topic may enrich Batik motifs and could be used to learn unidentified patterns. Applications of Batik motif generation and recognition on mobile devices could also be developed to help preserve batik as a cultural heritage spread throughout Indonesia.

Acknowledgement

This study is the result of a research project funded by Universitas Udayana under the PUPS scheme, entitled "Pelestarian Jenis-Jenis Batik melalui Sistem Klasifikasi berbasis Deep Learning" (Preservation of Batik Types through a Deep Learning-based Classification System).

References

[1] UNESCO, "Indonesian batik," https://ich.unesco.org/en/RL/indonesian-batik-00170.

[2] M. A. Rasyidi and T. Bariyah, "Batik pattern recognition using convolutional neural network," Bulletin of Electrical Engineering and Informatics, vol. 9, no. 4, pp. 1430–1437, Aug. 2020, doi: 10.11591/eei.v9i4.2385.

[3] A. Haake, "The Role of Symmetry in Javanese Batik Patterns," International Series in Modern Applied Mathematics and Computer Science, vol. 17, pp. 815–826, 1989.

[4] L. Cantoni, S. de Ascaniis, and M. Gravari-Barbas, "Heritage and Sustainable Tourism," The Role and Challenge of Information and Communication Technologies, 2018.

[5] D. A. Savita, I. K. G. D. Putra, and N. K. D. Rusjayanthi, "Public Sentiment Analysis of Online Transportation in Indonesia through Social Media Using Google Machine Learning," JURNAL ILMIAH MERPATI, vol. 9, no. 2, pp. 153–164, 2021.

[6] I. P. Y. P. Yasa, I. K. G. D. Putra, and D. M. S. Arsa, "Augmented Reality Application with Real Object Marker of Tanah Lot Temple," JURNAL ILMIAH MERPATI, vol. 9, no. 1, pp. 46–57, 2021.

[7] A. A. Priyangka and I. M. S. Kumara, "Classification Of Rice Plant Diseases Using the Convolutional Neural Network Method," Lontar Komputer: Jurnal Ilmiah Teknologi Informasi, vol. 12, no. 2, pp. 123–129, 2021, doi: 10.24843/LKJITI.2021.v12.i02.p06.

[8] I. P. A. Dharmaadi and D. M. S. Arsa, "Studi Pustaka Sistem Pemantauan Jaringan Distribusi Air Publik berbasis Internet of Things (IoT)" [Literature Study of Internet of Things (IoT)-based Public Water Distribution Network Monitoring Systems], JURNAL ILMIAH MERPATI, vol. 8, no. 1, pp. 54–60, 2020.

[9] H. Fahmi, R. A. M. Zen, H. R. Sanabila, I. Nurhaida, and A. M. Arymurthy, "Feature selection and reduction for batik image retrieval," in ACM International Conference Proceeding Series, Dec. 2016, pp. 47–52, doi: 10.1145/3033288.3033327.

[10] P. Pudil, J. Novovičová, and J. Kittler, "Floating search methods in feature selection," Pattern Recognition Letters, vol. 15, no. 11, pp. 1119–1125, 1994.

[11] H. Abdi and L. J. Williams, "Principal component analysis," Wiley Interdisciplinary Reviews: Computational Statistics, vol. 2, no. 4, pp. 433–459, 2010.

[12] I. Nurhaida, H. Wei, R. A. M. Zen, R. Manurung, and A. M. Arymurthy, "Texture fusion for batik motif retrieval system," International Journal of Electrical and Computer Engineering (IJECE), vol. 6, no. 6, pp. 3174–3187, 2016.

[13] H. Prasetyo, W. Wiranto, W. Winarno, U. Salamah, and B. Harjito, "Batik image retrieval using ODBTC feature and particle swarm optimization," Journal of Telecommunication, Electronic and Computer Engineering (JTEC), vol. 10, no. 2–4, pp. 71–74, 2018.

[14] N. Suciati, A. Kridanto, M. F. Naufal, M. Machmud, and A. Y. Wicaksono, "Fast discrete curvelet transform and HSV color features for batik image classification," in 2015 International Conference on Information & Communication Technology and Systems (ICTS), 2015, pp. 99–104.

[15] I. Nurhaida, V. Ayumi, D. Fitrianah, R. A. M. Zen, H. Noprisson, and H. Wei, "Implementation of deep neural networks (DNN) with batch normalization for batik pattern recognition," International Journal of Electrical & Computer Engineering (2088-8708), vol. 10, 2020.

[16] T. Handhayani, J. Hendryli, and L. Hiryanto, "Comparison of shallow and deep learning models for classification of Lasem batik patterns," in 2017 1st International Conference on Informatics and Computational Sciences (ICICoS), 2017, pp. 11–16.

[17] K. Simonyan and A. Zisserman, "Very deep convolutional networks for large-scale image recognition," arXiv preprint arXiv:1409.1556, 2014.

[18] K. He, X. Zhang, S. Ren, and J. Sun, "Deep residual learning for image recognition," in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

[19] Y. Gultom, A. M. Arymurthy, and R. J. Masikome, "Batik classification using deep convolutional network transfer learning," Jurnal Ilmu Komputer dan Informasi, vol. 11, no. 2, pp. 59–66, 2018.

[20] D. M. S. Arsa and A. A. N. H. Susila, "VGG16 in Batik Classification based on Random Forest," in 2019 International Conference on Information Management and Technology (ICIMTech), 2019, vol. 1, pp. 295–299.

[21] I. Goodfellow et al., "Generative adversarial nets," in Advances in Neural Information Processing Systems, 2014, pp. 2672–2680.

[22] A. Radford, L. Metz, and S. Chintala, "Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks," in 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings, 2016. [Online]. Available: http://arxiv.org/abs/1511.06434

[23] Q. Cheng and X. Gu, "Cross-modal Feature Alignment based Hybrid Attentional Generative Adversarial Networks for Text-to-image Synthesis," Digital Signal Processing, p. 102866, 2020.

[24] Y. Chen et al., "Person Image Synthesis through Siamese Generative Adversarial Network," Neurocomputing, 2020.

[25] A. N. Amalia, A. F. Huda, D. R. Ramdania, and M. Irfan, "Making a Batik Dataset for Text to Image Synthesis Using Generative Adversarial Networks," in 2019 IEEE 5th International Conference on Wireless and Telematics (ICWT), 2019, pp. 1–7.

[26] M. Abdurrahman, N. H. Shabrina, and D. K. Halim, "Generative Adversarial Network Implementation for Batik Motif Synthesis," in 2019 5th International Conference on New Media Studies (CONMEDIA), 2019, pp. 63–67.

[27] W.-T. Chu and L.-Y. Ko, "BatikGAN: A Generative Adversarial Network for Batik Creation," in Proceedings of the 2020 Joint Workshop on Multimedia Artworks Analysis and Attractiveness Computing in Multimedia, 2020, pp. 13–18.

[28] A. E. Minarno, Y. Azhar, F. D. S. Sumadi, and Y. Munarko, "A Robust Batik Image Classification using Multi Texton Co-Occurrence Descriptor and Support Vector Machine," in 2020 3rd International Conference on Intelligent Autonomous Systems (ICoIAS), 2020, pp. 51–55.

[29] A. E. Minarno, Y. Munarko, A. Kurniawardhani, and F. Bimantoro, "Classification of Texture Using Multi Texton Histogram and Probabilistic Neural Network," in IOP Conf. Ser. Mater. Sci. Eng., 2016, vol. 105, no. 1, p. 12022.

[30] A. E. Minarno, A. S. Maulani, A. Kurniawardhani, F. Bimantoro, and N. Suciati, "Comparison of methods for batik classification using multi texton histogram," Telkomnika, vol. 16, no. 3, pp. 1358–1366, 2018.

[31] A. E. Minarno, A. Kurniawardhani, and F. Bimantoro, "Image retrieval based on multi structure co-occurrence descriptor," Telkomnika, vol. 14, no. 3, pp. 1175–1182, 2016.

[32] I. M. A. Agastya and A. Setyanto, "Classification of Indonesian Batik Using Deep Learning Techniques and Data Augmentation," in 2018 3rd International Conference on Information Technology, Information System and Electrical Engineering (ICITISEE), 2018, pp. 27–31.

[33] I. Nurhaida, A. Noviyanto, R. Manurung, and A. M. Arymurthy, "Automatic Indonesian's batik pattern recognition using SIFT approach," Procedia Computer Science, vol. 59, no. 5, pp. 567–576, 2015.

[34] C. S. K. Aditya, M. Hani'ah, R. R. Bintana, and N. Suciati, "Batik classification using neural network with gray level co-occurence matrix and statistical color feature extraction," in 2015 International Conference on Information & Communication Technology and Systems (ICTS), 2015, pp. 163–168.

[35] A. A. Kasim, R. Wardoyo, and A. Harjoko, "Batik classification with artificial neural network based on texture-shape feature of main ornament," International Journal of Intelligent Systems and Applications, vol. 11, no. 6, p. 55, 2017.

[36] A. A. Kasim, R. Wardoyo, and A. Harjoko, "The selection feature for batik motif classification with information gain value," in International Conference on Soft Computing in Data Science, 2017, pp. 106–115.

[37] F. U. Karimah and A. Harjoko, "Classification of batik kain besurek image using speed up robust features (SURF) and gray level co-occurrence matrix (GLCM)," in International Conference on Soft Computing in Data Science, 2017, pp. 81–91.

[38] A. Y. Wicaksono, N. Suciati, C. Fatichah, K. Uchimura, and G. Koutaki, "Modified convolutional neural network architecture for batik motif image classification," IPTEK Journal of Science, vol. 2, no. 2, 2017.

[39] R. E. Caraka, T. W. Cenggoro, B. Pardamean, et al., "Batik parang rusak detection using geometric invariant moment," in 2018 Indonesian Association for Pattern Recognition International Conference (INAPR), 2018, pp. 71–74.

[40] J. Kusanti and A. Suprapto, "Combination of Otsu and Canny Method to Identify the Characteristics of Solo Batik as Surakarta Traditional Batik," in 2019 2nd International Conference of Computer and Informatics Engineering (IC2IE), 2019, pp. 63–68.

Batik’s Pattern Recognition and Generation: Review and Challenges (Dewa Made Sri Arsa) 121
