Kekarangan Balinese Carving Classification Using Gabor Convolutional Neural Network


LONTAR KOMPUTER VOL. 13, NO. 1 APRIL 2022

DOI : 10.24843/LKJITI.2022.v13.i01.p01

Accredited Sinta 2 by RISTEKDIKTI Decree No. 158/E/KPT/2021

p-ISSN 2088-1541

e-ISSN 2541-5832


I Putu Bagus Gede Prasetyo Raharja (a, 1), I Made Suwija Putra (a, 2), Tony Le (b, 3)

(a) Department of Information Technology, Udayana University, Bukit Jimbaran, Bali, Indonesia

(b) Department of Electrical and Computer Engineering, University of New Orleans, 2000 Lakeshore Dr, New Orleans, LA 70148, United States

(1) prasetyo.raharja@student.unud.ac.id (Corresponding author)

Abstract

Balinese traditional carvings are part of Balinese culture and can easily be found on the island of Bali, from the decoration of Hindu temples to traditional Balinese houses. One type of traditional Balinese carving ornament is the Kekarangan ornament. Although traditional Balinese carvings are abundant, many Balinese people know only the shape of a carving without knowing its name and characteristics. This lack of understanding is caused by the difficulty of finding materials for studying traditional Balinese carvings. A classification system for traditional Kekarangan Balinese carvings can help Balinese people identify the class of a traditional Balinese carving. This study used the Gabor CNN method: a multi-orientation Gabor filter is used for feature extraction and image augmentation, coupled with a Convolutional Neural Network for image classification. The Gabor CNN method achieved a highest image classification accuracy of 89%.

Keywords: Balinese Carving, Convolutional Neural Network, Computer Vision, Gabor Filter, Machine Learning

Bali is a tourist destination that still preserves its traditional culture, one part of which is Balinese traditional carving. Balinese traditional carving stems from the carver's imagination and creativity in interpreting natural forms. Balinese traditional carvings can be found in Hindu temples and traditional Balinese houses. One type of traditional Balinese carving ornament is Kekarangan, whose shapes are inspired by animals and plants. A Kekarangan carving ornament can consist of several other classes of carving ornaments. Differences in how each carver interprets natural forms make every Kekarangan carving unique. Classifying Kekarangan Balinese traditional carvings can help Balinese people identify the class of a carving despite the uniqueness of each piece. It also creates digital documentation that helps preserve traditional Balinese culture and can serve as a medium for future generations to learn Balinese traditional carving, especially the Kekarangan ornament.

Kekarangan Balinese traditional carvings have previously been classified manually by identifying the characteristics of their lines, dots, shapes, and accents. This pattern classification can instead be performed automatically using computer vision and deep learning. The Gabor filter is a feature extractor that has been used in computer vision because of its excellent properties in the spatial domain. A Convolutional Neural Network (CNN) is a popular deep learning classification method, and CNNs have recently been shown to perform pattern recognition automatically and very effectively on large amounts of data. Our research combines a CNN with the Gabor filter to classify three classes of Kekarangan Balinese traditional carving, Karang Gajah, Karang Daun, and Karang Goak, using 300 RGB images of 256 x 256 pixels each. We use image augmentation to overcome the limited data problem. The evaluation of the neural network showed a highest accuracy of 89%.

Artificial Intelligence (AI) has grown rapidly in recent years, with collaboration in the field becoming more common and the scope of research projects growing [1]. The Convolutional Neural Network method has been successfully applied to computer vision problems, especially image classification and object detection. This section discusses studies that support this research.

Chauhan et al. [2] used simple CNN models with data augmentation to perform image recognition on the MNIST and CIFAR-10 datasets. Their results showed that data augmentation can increase model accuracy on large datasets. The data augmentation techniques used were mirroring, random cropping, rotation, and color shifting. The CNN model reached an accuracy of 99.6% on MNIST and 80.17% on CIFAR-10.

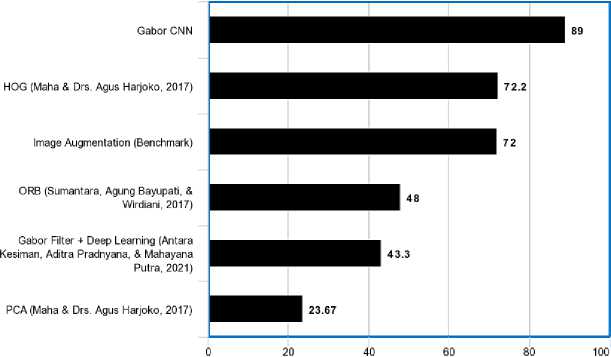

Maha et al. [3] showed that computer vision and machine learning methods can be used to classify traditional Balinese carvings. The feature extraction step used the HOG and PCA methods, and the features from each were used as training data for an LVQ classifier. On the test dataset, HOG features obtained an average recognition accuracy of 72.2%, while PCA features obtained 23.67%. The LVQ model used a learning rate of 0.001 and 1000 epochs.

Sumantara et al. [4] also studied the classification of traditional Balinese carvings. They identified traditional Balinese carvings using ORB (Oriented FAST and Rotated BRIEF) feature extraction and classified them using the Hamming Brute Force method. Applied in their Bali Carving Recognition application, ORB obtained a recognition accuracy of 48%. Classification success is influenced by several factors, such as the quality of the reference image, lighting, and distance.

Kesiman et al. [5] applied Gabor filter feature extraction and a deep learning classification method to a dataset of traditional Balinese carvings. The dataset consists of 258 images of traditional Balinese carvings in 18 classes. The Gabor filter features were classified with a Multilayer Perceptron trained with the Backpropagation algorithm, and the classification model reached an accuracy of 43.3%.

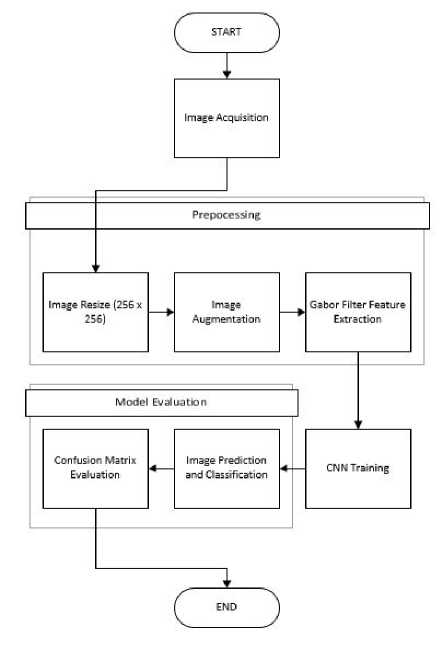

Figure 2 shows an overview of the system workflow. The images used in this research were taken by the researchers with the 25 MP main camera of a Samsung Galaxy A50 in the Gianyar, Badung, and Buleleng districts of Bali. The dataset consists of 249 images across three classes. Figure 1 shows example images of the three classes of Kekarangan Balinese traditional carving: Karang Daun, which resembles flowers and leaves; Karang Gajah, which resembles an elephant; and Karang Goak, which resembles a bird. Table 1 lists the number of images in each class.

Figure 1. Sample images from each carving class

Table 1. Image acquisition details

| Class Name | Number of Images |
|---|---|
| Karang Daun | 86 |
| Karang Goak | 88 |
| Karang Gajah | 75 |

In the preprocessing step, the raw camera images are converted into a Gabor filter feature image dataset. The dataset is then split into training, validation, and testing datasets. We trained the CNN model to classify the images using the training dataset and validated it with the validation dataset. The evaluation step measured performance by predicting the images in the testing dataset and building a confusion matrix from the prediction results.

The amount of data provided during the training of a CNN model is one of the factors that determine the performance of a neural network. A simple neural network can learn from a set of images to perform tasks such as character recognition, image segmentation, and image classification [6]. However, a model requires an enormous amount of labeled data to perform computer vision tasks with high performance [7]. Data augmentation overcomes a lack of training data by artificially inflating the training set with label-preserving transformations.

Generic data augmentation has recently been used extensively to improve Convolutional Neural Network (CNN) performance. Transformations include geometric methods, such as zooming, flipping, rotation, and cropping, and photometric methods, such as color jittering, edge enhancement, and fancy PCA [8]. New training samples can be created using prior knowledge of transformation-invariance properties to improve learning. An attractive characteristic of these augmentation methods is that they can be combined with one another [9]. The data augmentation methods used in this research were horizontal flipping and rotation by 20° and −20°, which is the optimal rotation range that still preserves the label of the data [10]. We applied data augmentation to the raw dataset until all classes were balanced. The augmentation process produced a total of 300 images, 100 in each class.
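The balancing step can be sketched as follows. This is a minimal illustration in Python with NumPy, not the authors' code: the paper states only that horizontal flips and ±20° rotations were applied until each class reached 100 images, so the order in which source images and transforms are picked, the nearest-neighbour resampling, and the zero fill at the corners are our assumptions, and the helper names are hypothetical.

```python
import numpy as np

def horizontal_flip(img):
    """Mirror an (H, W) or (H, W, C) image left to right."""
    return img[:, ::-1].copy()

def rotate_nearest(img, degrees):
    """Rotate about the image centre using nearest-neighbour sampling.

    Assumption: output pixels whose source falls outside the image are set to 0.
    """
    h, w = img.shape[:2]
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.indices((h, w))
    # Inverse mapping: for every output pixel, find the source pixel it comes from.
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    src_x = np.rint(src_x).astype(int)
    src_y = np.rint(src_y).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[ys[valid], xs[valid]] = img[src_y[valid], src_x[valid]]
    return out

# The three label-preserving transforms named in the text.
AUGMENTATIONS = (
    horizontal_flip,
    lambda img: rotate_nearest(img, 20.0),
    lambda img: rotate_nearest(img, -20.0),
)

def balance_class(images, target):
    """Return the original images plus augmented copies, `target` in total.

    Assumption: the i-th extra image uses source i % n and transform i // n,
    so every image is flipped once before any image is rotated.
    """
    n = len(images)
    if n == 0:
        raise ValueError("cannot augment an empty class")
    out = list(images)
    i = 0
    while len(out) < target:
        source = images[i % n]
        augment = AUGMENTATIONS[(i // n) % len(AUGMENTATIONS)]
        out.append(augment(source))
        i += 1
    return out
```

With the class sizes in Table 1 (86, 88, and 75 images), `balance_class(images, 100)` appends 14, 12, and 25 horizontally flipped copies respectively; under this ordering the ±20° rotations are only used once every image in a class has been flipped.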

Figure 2. Proposed system workflow

Table 2. Result of Image Augmentation

| Class Name | Number of Images |
|---|---|
| Karang Daun | 100 |
| Karang Goak | 100 |
| Karang Gajah | 100 |

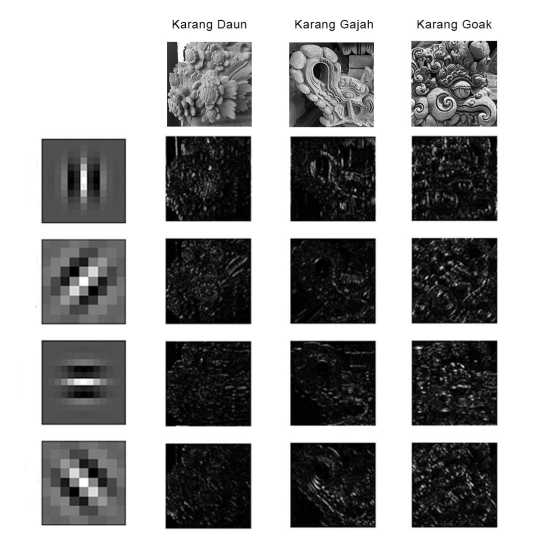

Feature extraction is a significant component of object detection and image classification [11]. We used the Gabor filter to extract features from the images. Although a CNN can perform feature extraction using multiple layers of feature detectors [9], the Gabor filter can help extract spatial information that the CNN may not learn on its own. General feature extraction approaches exist, but they are problematic in practice because they require precise segmentation and uniform lighting. The Gabor filter can overcome this limitation because Gabor features can preserve pose information in the feature space [12]. In this paper, we used Gabor filters with four orientations, 0°, 45°, 90°, and 135°, and a frequency of 0.1. Figure 3 shows the Gabor feature images of each class and each orientation. Equation 1 shows the complex Gabor function used to build the Gabor filter feature extractor in this research. The Gabor filter feature extraction process generated four feature images from each image, for a total of 1200 images in the training and testing dataset.

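$$g(x, y; \lambda, \theta, \psi, \sigma, \gamma) = \exp\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right) \exp\left(i\left(2\pi \frac{x'}{\lambda} + \psi\right)\right) \tag{1}$$

$$x' = x \cos\theta + y \sin\theta \tag{2}$$

$$y' = -x \sin\theta + y \cos\theta \tag{3}$$

where λ is the wavelength of the sinusoidal carrier, θ the filter orientation, ψ the phase offset, σ the standard deviation of the Gaussian envelope, and γ the spatial aspect ratio.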

Figure 3. Gabor image results for different filter orientations
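The filter bank of Equations 1 to 3 can be sketched in Python with NumPy as below. It is an illustrative sketch, not the authors' implementation: it assumes grayscale input, reads the stated frequency of 0.1 as λ = 1/0.1 = 10 pixels, assumes evenly spaced orientations of 0°, 45°, 90°, and 135°, and picks the kernel size (31), σ (4.0), γ (0.5), and ψ (0) ourselves because the paper does not report them. Each orientation yields one magnitude-response image, which is how one input image becomes four feature images.

```python
import numpy as np

def gabor_kernel(ksize, sigma, theta, lambd, gamma, psi=0.0):
    """Complex Gabor kernel following Equations 1-3 (ksize should be odd)."""
    half = ksize // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_rot = x * np.cos(theta) + y * np.sin(theta)     # Equation 2
    y_rot = -x * np.sin(theta) + y * np.cos(theta)    # Equation 3
    envelope = np.exp(-(x_rot**2 + gamma**2 * y_rot**2) / (2.0 * sigma**2))
    carrier = np.exp(1j * (2.0 * np.pi * x_rot / lambd + psi))
    return envelope * carrier                         # Equation 1

def convolve_same(img, kernel):
    """2-D linear convolution via FFT, cropped back to the input size."""
    h, w = img.shape
    kh, kw = kernel.shape
    shape = (h + kh - 1, w + kw - 1)
    full = np.fft.ifft2(np.fft.fft2(img, shape) * np.fft.fft2(kernel, shape))
    top, left = kh // 2, kw // 2
    return full[top:top + h, left:left + w]

def gabor_features(gray, orientations_deg=(0, 45, 90, 135), frequency=0.1,
                   ksize=31, sigma=4.0, gamma=0.5):
    """Return one magnitude-response image per orientation, shape (n, H, W).

    Assumed parameters (not reported in the paper): ksize, sigma, gamma, psi=0.
    """
    lambd = 1.0 / frequency  # 0.1 cycles per pixel -> wavelength of 10 pixels
    maps = []
    for deg in orientations_deg:
        kernel = gabor_kernel(ksize, sigma, np.deg2rad(deg), lambd, gamma)
        maps.append(np.abs(convolve_same(gray, kernel)))
    return np.stack(maps)
```

Libraries such as OpenCV provide `cv2.getGaborKernel`, which returns the real part of this filter; the complex form is kept here so that the magnitude response does not depend on the phase of the carrier.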

A Convolutional Neural Network (CNN) is a kind of neural network developed from the Multilayer Perceptron (MLP). CNN is an efficient recognition algorithm that is widely used in pattern recognition and image processing. A CNN uses convolution layers and pooling layers to extract features from an image. Transfer learning is one tool that can address insufficient training data when training a CNN model [13]. Research in [14] showed that fine-tuning a pre-trained model on a small data sample gives a better result than, or in the worst case the same result as, a CNN trained from scratch. Researchers have implemented deep learning models such as VGG16 [15], MobileNet [17], GoogLeNet [16], and ResNet [18]. In this research, we use the MobileNet model to speed up the training process, combined with a 1024x1024 fine-tuning layer and a softmax layer with three outputs as the classifier. Compared to other models, MobileNet has excellent performance and is suitable for use on mobile platforms [19].
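One way to read the classifier described above is sketched below with NumPy: global average pooling over the backbone's feature map, fully connected layers, and a three-way softmax. Several details are assumptions, not taken from the paper: we interpret "1024x1024" as two fully connected ReLU layers of 1024 units, we assume a MobileNet (v1, width multiplier 1.0) backbone whose final feature map for a 256 × 256 input is 8 × 8 × 1024, and random He-style weights stand in for trained ones. In Keras this head would normally sit on top of `tf.keras.applications.MobileNet(include_top=False, ...)`; the NumPy version only shows the shapes and the forward pass.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

class ClassifierHead:
    """GAP -> fully connected ReLU layers -> softmax over the carving classes.

    Assumption: "1024x1024" is read as two hidden layers of 1024 units.
    Weights are random (He-style) placeholders, not trained values.
    """

    def __init__(self, in_channels=1024, hidden=(1024, 1024), n_classes=3, seed=0):
        rng = np.random.default_rng(seed)
        sizes = [in_channels, *hidden, n_classes]
        self.weights = [rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b))
                        for a, b in zip(sizes[:-1], sizes[1:])]
        self.biases = [np.zeros(b) for b in sizes[1:]]

    def __call__(self, feature_maps):
        # feature_maps: (batch, H, W, C), e.g. (batch, 8, 8, 1024) from the backbone
        h = feature_maps.mean(axis=(1, 2))  # global average pooling -> (batch, C)
        for w, b in zip(self.weights[:-1], self.biases[:-1]):
            h = relu(h @ w + b)
        logits = h @ self.weights[-1] + self.biases[-1]
        return softmax(logits)  # (batch, n_classes); each row sums to 1
```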

Confusion matrices can be used to evaluate the performance of a deep learning model on an image classification task. From the confusion matrix, we can analyze and identify the effectiveness of the classification on each training and testing class. In this research, we calculated accuracy, precision, recall, and F-score metrics from the confusion matrix using Equations 4 to 7. Here, l is the total number of classes; tp_i, tn_i, fn_i, and fp_i are the true positives, true negatives, false negatives, and false positives of class i; and β is the parameter that weights precision against recall in their weighted harmonic mean (β = 1 gives the F1 score) [20].

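$$\text{Average Accuracy} = \frac{1}{l}\sum_{i=1}^{l} \frac{tp_i + tn_i}{tp_i + tn_i + fp_i + fn_i} \tag{4}$$

$$\text{Precision}_M = \frac{1}{l}\sum_{i=1}^{l} \frac{tp_i}{tp_i + fp_i} \tag{5}$$

$$\text{Recall}_M = \frac{1}{l}\sum_{i=1}^{l} \frac{tp_i}{tp_i + fn_i} \tag{6}$$

$$\text{FScore}_M = \frac{(\beta^2 + 1)\,\text{Precision}_M\,\text{Recall}_M}{\beta^2\,\text{Precision}_M + \text{Recall}_M} \tag{7}$$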
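Equations 4 to 7 can be computed directly from a confusion matrix. The sketch below is a minimal NumPy version, assuming rows hold the actual class and columns the predicted class; the function name is hypothetical, and a class that is never predicted would make its precision term NaN (division by zero).

```python
import numpy as np

def macro_metrics(cm, beta=1.0):
    """Equations 4-7 from a confusion matrix (rows: actual, columns: predicted)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as class i, actually another class
    fn = cm.sum(axis=1) - tp   # actually class i, predicted as another class
    tn = total - tp - fp - fn  # samples involving neither case for class i
    average_accuracy = np.mean((tp + tn) / (tp + tn + fp + fn))  # Equation 4
    precision = np.mean(tp / (tp + fp))                           # Equation 5
    recall = np.mean(tp / (tp + fn))                              # Equation 6
    f_score = ((beta**2 + 1) * precision * recall
               / (beta**2 * precision + recall))                  # Equation 7
    return {
        "average_accuracy": float(average_accuracy),
        "precision": float(precision),
        "recall": float(recall),
        "f_score": float(f_score),
    }
```

For the example matrix `[[5, 1, 0], [1, 4, 1], [0, 0, 6]]`, Equation 4 gives 48/54 ≈ 0.89 because it counts true negatives, whereas the share of correctly classified images is 15/18 ≈ 0.83.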

In this research, we conducted six training and model evaluation scenarios, detailed in Table 4. We used two types of dataset to evaluate the performance of our Gabor CNN model. The first dataset consists of Kekarangan carving images produced by the image augmentation process without the Gabor filter feature extraction step; it serves as our benchmark. The second dataset consists of Kekarangan carving images that have passed through both the image augmentation process and the Gabor filter feature extraction step. Table 3 lists the number of images in each dataset. We also compared three optimizers for training the model: Adam, RMSProp, and SGD. We used 50 epochs and a learning rate of 1 × 10⁻⁵ as hyperparameters for all scenarios.

Table 3. Image acquisition details

| Class Name | Images Acquired | Benchmark Dataset | Gabor Dataset |
|---|---|---|---|
| Karang Daun | 86 images | 100 images | 400 images |
| Karang Goak | 88 images | 100 images | 400 images |
| Karang Gajah | 75 images | 100 images | 400 images |

Table 4. Scenario Details

| Scenario | Dataset | Optimizer |
|---|---|---|
| 1 | Benchmark Dataset | Adam |
| 2 | Benchmark Dataset | RMSProp |
| 3 | Benchmark Dataset | SGD |
| 4 | Gabor Dataset | Adam |
| 5 | Gabor Dataset | RMSProp |
| 6 | Gabor Dataset | SGD |
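The six scenarios in Table 4 are the cross product of two datasets and three optimizers with shared hyperparameters. A small configuration sketch in Python, with hypothetical names, makes that structure explicit; the training call itself is left out because the paper does not describe it beyond Keras and TensorFlow.

```python
from dataclasses import dataclass
from itertools import product

@dataclass(frozen=True)
class Scenario:
    number: int
    dataset: str
    optimizer: str
    epochs: int = 50             # shared by all scenarios
    learning_rate: float = 1e-5  # shared by all scenarios

DATASETS = ("Benchmark Dataset", "Gabor Dataset")
OPTIMIZERS = ("Adam", "RMSProp", "SGD")

def build_scenarios():
    """Enumerate Table 4: each dataset crossed with each optimizer, in table order."""
    return [Scenario(number, dataset, optimizer)
            for number, (dataset, optimizer)
            in enumerate(product(DATASETS, OPTIMIZERS), start=1)]

if __name__ == "__main__":
    for s in build_scenarios():
        print(s.number, s.dataset, s.optimizer, s.epochs, s.learning_rate)
```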

The Kekarangan Balinese traditional carving classification was conducted on a machine with an Intel Core i7 2.60 GHz processor, 8 GB of RAM, and an NVIDIA GeForce GTX 950M GPU with 4 GB of GDDR5 VRAM, using Python 3.7 with the Keras and TensorFlow 1.4 libraries. This section discusses the results of our training and model evaluation scenarios.

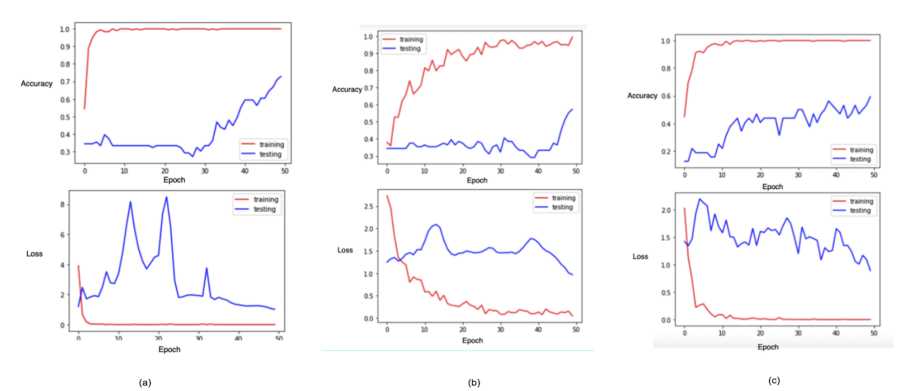

In this scenario, we trained on the dataset consisting of Kekarangan carving images produced by the image augmentation process, without the Gabor filter feature extraction step. We split the dataset into training, validation, and testing datasets; the details of each are shown in Table 5. The highest training accuracy obtained was 100%, and the highest validation accuracy, 76%, came from the model trained with the Adam optimizer. Figure 4 shows the accuracy and loss graphs of every model trained with the benchmark dataset on each epoch. The graphs show that the validation accuracy and loss values are not stable. A model with a high or unstable loss value tends to perform inconsistently when classifying images that are not included in the training or validation dataset. To further evaluate our models, we conducted the testing step by predicting all images in the testing dataset and analyzing the results using a confusion matrix. In the testing step, the model achieved a highest accuracy of 72%, highest recall of 72%, highest precision of 76%, and highest F1 score of 71%.

Figure 4. Training result graph on benchmark dataset: (a) Adam Optimizer, (b) RMSProp Optimizer, (c) SGD Optimizer

Table 5. Split Dataset Detail for Benchmark Dataset

| Class Name | Training | Validation | Testing |
|---|---|---|---|
| Karang Daun | 70 images | 24 images | 6 images |
| Karang Goak | 70 images | 24 images | 6 images |
| Karang Gajah | 70 images | 24 images | 6 images |
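Tables 5 and 7 use the same 70/24/6 percentage split per class (70/24/6 of 100 images and 280/96/24 of 400). The sketch below, with a hypothetical `grouped_split` helper, reproduces those counts. Splitting by source image, so that the four Gabor orientation images of one photograph stay in the same subset, is our assumption; the paper does not state how the split was drawn, and grouping keeps near-duplicate images out of both training and testing at once.

```python
import random
from collections import defaultdict

def grouped_split(samples, group_of, train_pct=70, val_pct=24, seed=0):
    """Split samples into train/validation/test by group, not by sample.

    Assumption: all samples sharing a group key (e.g. the four Gabor
    orientation images of one photograph) land in the same subset; the
    groups left after the train and validation shares go to testing.
    """
    groups = defaultdict(list)
    for sample in samples:
        groups[group_of(sample)].append(sample)
    keys = sorted(groups)
    random.Random(seed).shuffle(keys)
    n_train = len(keys) * train_pct // 100
    n_val = len(keys) * val_pct // 100

    def expand(selected):
        return [s for k in selected for s in groups[k]]

    return (expand(keys[:n_train]),
            expand(keys[n_train:n_train + n_val]),
            expand(keys[n_train + n_val:]))
```

For one Gabor class, `samples` could be `(source_id, orientation)` pairs with `group_of=lambda s: s[0]`; for the benchmark dataset each image is its own group.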

Table 6. Gabor Filter Performance Comparison

| Metric | Adam (without Gabor) | RMSProp (without Gabor) | SGD (without Gabor) | Adam (with Gabor) | RMSProp (with Gabor) | SGD (with Gabor) |
|---|---|---|---|---|---|---|
| Accuracy | 72% | 61% | 58% | 89% | 86% | 87% |
| Recall | 72% | 61% | 58% | 89% | 86% | 87% |
| Precision | 76% | 66% | 57% | 89% | 87% | 87% |
| F1 Score | 71% | 60% | 57% | 89% | 86% | 87% |

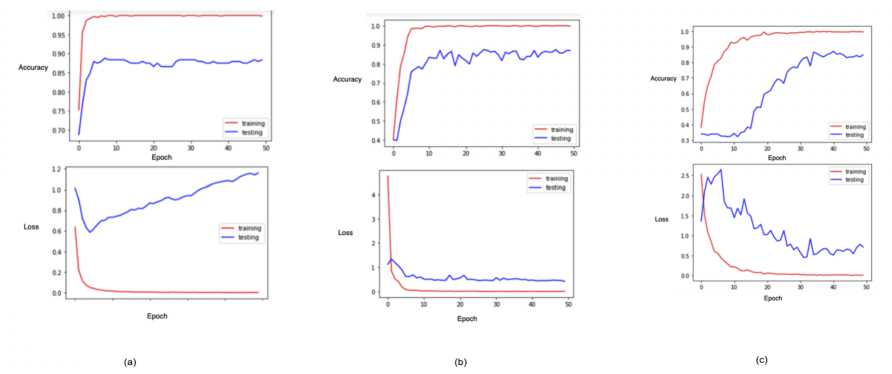

In this scenario, we trained on a dataset consisting of Kekarangan carving images that had passed through both the image augmentation process and the Gabor filter feature extraction step. We split the dataset into training, validation, and testing datasets; the details of each are shown in Table 7. The highest training accuracy, 100%, came from the model trained with the RMSProp optimizer, and the highest validation accuracy, 86.25%, came from the models trained with the SGD and Adam optimizers. Figure 5 shows the accuracy and loss graphs of each model trained with the Gabor dataset on each epoch. The graphs show that the models trained with the Gabor dataset tend to be more stable and more accurate than the models trained with the dataset without Gabor filter feature extraction. To further evaluate our models, we conducted the testing step by predicting all images in the testing dataset and analyzing the results using a confusion matrix. In the testing step, the model achieved a highest accuracy of 89%, highest recall of 89%, highest precision of 89%, and highest F1 score of 89%. Table 6 details the performance with and without the Gabor filter. These results indicate that the Gabor filter improves model accuracy and yields a more stable model than training only on images with geometric transformations. Figure 6 compares our proposed method with other methods for Balinese traditional carving classification.

Figure 5. Training result graph on Gabor dataset: (a) Adam Optimizer, (b) RMSProp Optimizer, (c) SGD Optimizer

Table 7. Split Dataset Detail for Gabor Dataset

| Class Name | Training | Validation | Testing |
|---|---|---|---|
| Karang Daun | 280 images | 96 images | 24 images |
| Karang Goak | 280 images | 96 images | 24 images |
| Karang Gajah | 280 images | 96 images | 24 images |

In this paper, we proposed a novel method for classifying Kekarangan Balinese traditional carvings. Our research shows the process of classifying Kekarangan Balinese traditional carvings using a Convolutional Neural Network (CNN) on Gabor filter images. We built our own dataset for this research. The acquired images were passed through data augmentation to increase the amount of data used in the training and testing steps, and the Gabor filter was used to extract features from the images. Confusion matrix metrics were used to evaluate the model.

We conducted six training and model evaluation scenarios using the benchmark dataset and the Gabor dataset. Our model trained with the Gabor dataset increased accuracy by up to 17% over our benchmark dataset and by 16.7% over the previous method used to classify Kekarangan carving images. Based on these results, our method can be used to further enhance model performance in Balinese traditional carving classification.

Figure 6. Comparison with different Balinese traditional carving classification methods (accuracy, %)

References

[1] I. M. Widiatmika, I. Piarsa, and A. Syafiandini, “Recognition of the baby footprint characteristics using wavelet method and k-nearest neighbor (k-NN),” Lontar Komputer: Jurnal Ilmiah Teknologi Informasi, vol. 12, no. 1, pp. 41–52, 2021. [Online]. Available: https://ojs.unud.ac.id/index.php/lontar/article/view/70638

[2] R. Chauhan, K. K. Ghanshala, and R. C. Joshi, “Convolutional neural network (CNN) for image detection and recognition,” in 2018 First International Conference on Secure Cyber Computing and Communication (ICSCCC), Dec. 2018, pp. 278–282.

[3] I. M. A. Maha, “Pengenalan pola motif ukiran Bali menggunakan histogram of oriented gradient (HOG) dan learning vector quantization (LVQ)” [Recognition of Balinese carving motif patterns using HOG and LVQ], Master’s thesis, Institut Teknologi Surabaya, 2017.

[4] Sumantara, Agung Bayupati, and Wirdiani, “Rancang bangun aplikasi pengenalan ukiran Bali dengan metode ORB” [Design of a Balinese carving recognition application using the ORB method], Jurnal Ilmiah Merpati, 2017.

[5] Antara Kesiman, Aditra Pradnyana, and Mahayana Putra, “Identifikasi citra ukiran ornamen tradisional Bali dengan metode multilayer perceptron” [Identification of traditional Balinese ornamental carving images using the multilayer perceptron method], SIN-TECH, 2021.

[6] Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278–2324, Nov. 1998.

[7] Y. LeCun, C. Cortes, and C. J. C. Burges, “The MNIST database of handwritten digits,” http://yann.lecun.com/exdb/mnist/, 2013, accessed: 2019-12-03.

[8] L. Taylor and G. Nitschke, “Improving deep learning with generic data augmentation,” in 2018 IEEE Symposium Series on Computational Intelligence (SSCI), Nov. 2018, pp. 1542–1547.

[9] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” in Advances in Neural Information Processing Systems, vol. 25, 2012.

[10] C. Shorten and T. M. Khoshgoftaar, “A survey on image data augmentation for deep learning,” Journal of Big Data, Jul. 2019.

[11] H. Yao, L. Chuyi, H. Dan, and Y. Weiyu, “Gabor feature based convolutional neural network for object recognition in natural scene,” in 2016 3rd International Conference on Information Science and Control Engineering (ICISCE), Jul. 2016, pp. 386–390.

[12] J.-K. Kamarainen, V. Kyrki, and H. Kälviäinen, “Invariance properties of Gabor filter-based features: Overview and applications,” IEEE Transactions on Image Processing, vol. 15, no. 5, pp. 1088–1099, May 2006.

[13] C. Tan, F. Sun, T. Kong, W. Zhang, C. Yang, and C. Liu, “A survey on deep transfer learning,” arXiv preprint arXiv:1808.01974, Aug. 2018.

[14] N. Tajbakhsh, J. Y. Shin, S. R. Gurudu, R. T. Hurst, C. B. Kendall, M. B. Gotway, and J. Liang, “Convolutional neural networks for medical image analysis: Full training or fine tuning?” IEEE Transactions on Medical Imaging, vol. 35, no. 5, pp. 1299–1312, May 2016.

[15] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, Sep. 2014.

[16] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich, “Going deeper with convolutions,” arXiv preprint arXiv:1409.4842, Sep. 2014.

[17] A. G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto, and H. Adam, “MobileNets: Efficient convolutional neural networks for mobile vision applications,” arXiv preprint arXiv:1704.04861, Apr. 2017.

[18] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” arXiv preprint arXiv:1512.03385, Dec. 2015.

[19] A. G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto, and H. Adam, “MobileNets: Efficient convolutional neural networks for mobile vision applications,” CoRR, vol. abs/1704.04861, 2017. [Online]. Available: http://arxiv.org/abs/1704.04861

[20] M. Sokolova and G. Lapalme, “A systematic analysis of performance measures for classification tasks,” Information Processing & Management, vol. 45, no. 4, pp. 427–437, 2009.
