Detecting the Ripeness of Harvest-Ready Dragon Fruit using Smaller VGGNet-Like Network


Journal of Electrical, Electronics and Informatics, Vol. 3 No. 2, August 2019


I Made Wismadi1, Duman Care Khrisne*2, I Made Arsa Suyadnya3


1,2,3Department of Electrical Engineering, Faculty of Engineering,

Udayana University, Badung, Indonesia

Email: duman@unud.ac.id

Abstract—This study develops an application that detects the ripeness of dragon fruit with a deep learning approach, using the Smaller VGGNet-like Network method. The dragon fruit are classified into two classes, ripe (ready for harvest) and unripe, using a Convolutional Neural Network (CNN). The training process uses the Keras package in Python with the TensorFlow backend. The model is tested using the confusion matrix and ROC method on 100 new test pictures. In these tests, the accuracy in classifying the ripeness of the dragon fruit is 91%, and the tests of the models trained for 20, 50, 100, and 500 epochs produced an AUROC value of 0.95.

Keywords—Dragon Fruit, Deep Learning, Smaller VGGNet-like Network.


I. INTRODUCTION

In Indonesia, dragon fruit has been popular since 2000. Regions that produce dragon fruit in Indonesia include Jember, Malang, Pasuruan, and Bali. Ripeness is among the most important factors to consider. Dragon fruit is a non-climacteric fruit, a type of fruit that will not ripen if it is harvested while unripe. Additionally, dragon fruit does not require substances such as carbide, ethylene gas, or CO2 to hasten the ripening process. Consequently, dragon fruit must be harvested when fully ripe so that its quality can be maintained after harvest and during storage [1]. Today, dragon fruit farmers still use a manual method to determine whether the fruit is ready to harvest: they count the time from when the flowers first bloom until the plant bears fruit to determine the right time to harvest.

This study addresses the problem of detecting the ripeness of harvest-ready dragon fruit by building an application that uses a deep learning approach and the Smaller VGGNet-like Network method. The deep learning approach classifies data according to the labels given in a training session, after repeatedly studying the features extracted from each item in order to differentiate one label from the others. In the testing session, the tested data are analyzed according to the specific characteristics of each label learned during training.


II. RELATED WORK

Regarding the ripeness of dragon fruit, research conducted by [2] on the influence of harvest time and storage temperature on the quality and storability of the super-red dragon fruit (Hylocereus costaricensis) revealed a very significant interaction between the harvest time, the storage room temperature, and the cumulative yield loss, freshness, and hardness. In another study [3], a dragon fruit ripeness quality assessment tool was designed using image processing with the HSV image segmentation method. After 100 trials, the assessment process using HSV image segmentation showed a success rate of 86%, and the time needed to assess one dragon fruit was between 15 and 22 seconds. Research [3] uses a machine learning approach to determine the ripeness of dragon fruit, but relying on color features (HSV) has a weakness: the dragon fruit cannot be checked in its natural state.

Several studies [4], [5] state that deep learning is very effective at overcoming these machine learning problems. Based on these issues and previous studies, this study develops an application to determine the ripeness of dragon fruit using a deep learning classification approach with the Smaller VGGNet-like Network method. The deep learning approach, with a convolutional neural network architecture, is used to analyze a picture of the dragon fruit’s surface and classify the fruit as ripe or not ripe with optimal accuracy.


III. PROPOSED APPROACH

This study proposes a CNN model to classify whether dragon fruit is ready for harvest, and uses a Smaller VGGNet-like Network to create the model. We use a deep learning approach, a field of machine learning that uses artificial neural networks to solve problems with large datasets. A deep (many-layered) artificial neural network can be applied to existing machine learning algorithms so that computers can learn with great speed, accuracy, and scale. Feature engineering, which extracts useful patterns from the data to help the model differentiate classes, is one of the main elements of deep learning.

Fig. 1. Ripe and Ready for Harvest Classification Results

In this study, the system performs two processes: the training process and the test process. The training process uses pictures to develop the model. The training pictures have their own labels because, at the start of the process, the pictures are manually categorized as ready for harvest or not ready for harvest with the help of experts (cultivators). The training pictures are then passed to the VGGNet-like feature extraction. After this step, the system is trained over several epochs to produce a model called the VGGNet-like Model. Once training is complete, the next step is the labeling process. The input picture first passes through the VGGNet-like feature extraction and is then passed to the VGGNet-like Model produced by the training process. This model predicts whether the dragon fruit in the picture is ready for harvest or not. Lastly, the system produces the labeled output pictures.

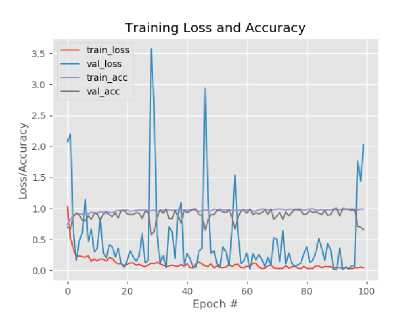

Fig. 2. Training Loss and Accuracy for 20 Epochs

To determine the performance of the model, the labeling accuracy on the validation pictures is tested. The labeled test pictures are passed to the VGGNet-like Model produced in the training process. The system predicts whether the fruit in each test picture is ready to harvest or not, producing labeled pictures. The predicted labels are then compared with the labels of the test pictures: if the labels match, the prediction is counted as accurate; if they differ, it is counted as inaccurate. The number of pictures used in the test process is 20% of the total number of pictures provided.
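The hold-out evaluation described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: the function names `split_dataset` and `accuracy`, the fixed random seed, and the file names are all hypothetical, and the 100-picture dataset is a placeholder.

```python
import random


def split_dataset(items, test_fraction=0.2, seed=0):
    """Shuffle the items and hold out `test_fraction` of them for testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    # The first n_test shuffled items form the test set, the rest the training set.
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(true_labels, predicted_labels):
    """Fraction of pictures whose predicted label matches the expert label."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    matches = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return matches / len(true_labels)


# Hypothetical file names and labels, for illustration only.
pictures = [(f"fruit_{i:03d}.jpg", "ripe" if i % 2 == 0 else "unripe")
            for i in range(100)]
train_set, test_set = split_dataset(pictures)
print(len(train_set), len(test_set))  # 80 20
```

Fixing the seed keeps the split reproducible between runs, which makes accuracy figures from different training settings comparable.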

Fig. 3. Training Loss and Accuracy for 50 Epochs


IV. RESULTS

The main process in creating this model starts with training on the data. This process forms the model that will be used for data testing. The parameter used to measure the model’s level of success is the accuracy value, which is determined by testing the model on data. The training process used the Keras package in Python with the TensorFlow backend.

Fig. 1 shows example results for the ripe category, obtained by running the classification process on the available test data. In Fig. 1, the program output shows that the dragon fruit is classified as ripe. If dragon fruit pictures of this class are classified as ripe, the results are correct; if they are classified as unripe, the results are incorrect. The confidence percentage of each picture’s classification result is also displayed.
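One common way to obtain a label together with a percentage is to apply a softmax to the network's raw class scores and report the largest probability. The sketch below assumes the classifier ends in a two-way softmax; the source does not state this, and the class order, function names, and example scores are hypothetical.

```python
import math

CLASSES = ("ripe", "unripe")  # assumed class order, not given in the source


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_with_confidence(scores, classes=CLASSES):
    """Return the most probable class and its probability as a percentage."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return classes[best], 100.0 * probs[best]


# Hypothetical raw scores for one picture.
label, pct = label_with_confidence([2.0, 0.0])
print(f"{label}: {pct:.2f}%")  # ripe: 88.08%
```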

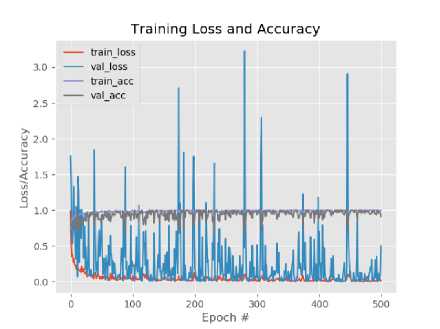

Fig. 4. Training Loss and Accuracy for 100 Epochs

After running the Convolutional Neural Network (CNN) algorithm, the training and validation results are obtained. Training was run for 20, 50, 100, and 500 epochs with a learning rate of 0.001. The comparison of all the epoch settings used in the training process is displayed below.

TABLE I. ACCURACY BASED ON EPOCH

| Epoch | Validation Accuracy | Validation Loss | Time (Seconds) |
|---|---|---|---|
| 20 | 0.8889 | 0.4989 | 1400 |
| 50 | 0.9667 | 0.1441 | 4000 |
| 100 | 0.6556 | 2.0282 | 7200 |
| 500 | 0.9111 | 0.4997 | 36000 |

Fig. 5. Training Loss and Accuracy for 100 Epochs

Based on Table I, with a learning rate of 0.001, fairly high validation accuracies were attained, reaching up to 0.9667. The table shows that the closer the number of epochs is to 50, the higher the accuracy of the testing results. When the number of epochs is increased above 50, the accuracy falls, to 0.6556 at 100 epochs and 0.9111 at 500 epochs. This may be because the number of epochs is too high relative to the size of the dataset.

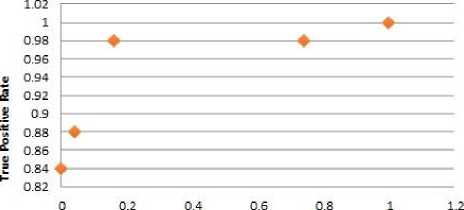

From the confusion matrix test results for 20, 50, 100, and 500 epochs, the FPR and TPR values are obtained and displayed in Table II. Using these FPR and TPR values, the receiver operating characteristic (ROC) curve is then formed and shown in Fig. 5.

TABLE II. FPR AND RECALL (TPR) OF EACH EPOCH

| Epoch | 20 | 50 | 100 | 500 |
|---|---|---|---|---|
| FPR | 0.04 | 0.16 | 0.74 | 0 |
| TPR | 0.88 | 0.98 | 0.98 | 0.84 |
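The FPR and TPR values in Table II follow from the confusion matrix counts by the standard definitions TPR = TP / (TP + FN) and FPR = FP / (FP + TN). The sketch below illustrates the calculation. The counts are hypothetical: the source does not list them, and they assume the 100 test pictures contain 50 ripe and 50 unripe fruit. Under that assumption they reproduce the 50-epoch values reported in the text (TPR 0.98, FPR 0.16, accuracy 91%).

```python
def rates(tp, fn, fp, tn):
    """Return (TPR, FPR, accuracy) from confusion matrix counts."""
    tpr = tp / (tp + fn)                   # recall: ripe fruit labeled ripe
    fpr = fp / (fp + tn)                   # unripe fruit wrongly labeled ripe
    acc = (tp + tn) / (tp + fn + fp + tn)  # all correct labels over all pictures
    return tpr, fpr, acc


# Hypothetical counts, assuming 50 ripe and 50 unripe test pictures.
tpr, fpr, acc = rates(tp=49, fn=1, fp=8, tn=42)
print(tpr, fpr, acc)  # 0.98 0.16 0.91
```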

Fig. 5. ROC Curve (horizontal axis: False Positive Rate)

To attain the AUROC, the area is calculated using the trapezoid method which is shown in the equation below.

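$$\mathrm{AUROC} = \sum_{i=1}^{n-1} \frac{1}{2}\,(y_{1i} + y_{2i}) \times \omega_i$$

$$\begin{aligned}
\mathrm{AUROC} &= \left(\tfrac{1}{2}(0.84 + 0.88) \times 0.04\right) + \left(\tfrac{1}{2}(0.88 + 0.98) \times 0.12\right) + (0.98 \times 0.58) + \left(\tfrac{1}{2}(0.98 + 1) \times 0.26\right) \\
&= 0.03 + 0.11 + 0.56 + 0.25 \\
&= 0.95
\end{aligned}$$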

According to [6], an AUROC between 0.9 and 1.0 is categorized as very good, so the performance of the dragon fruit ripeness detection application falls in this category.
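The trapezoid sum can be checked with a short script. This is a sketch rather than the authors' code: the function name `auroc_trapezoid` is hypothetical. The ROC points are the (FPR, TPR) pairs from Table II, sorted by FPR, plus the closing point (1, 1) that appears in the last term of the worked sum. Here each segment's width along the FPR axis plays the role of ω_i, and the TPR values at its two ends play the roles of y_1i and y_2i.

```python
def auroc_trapezoid(points):
    """Area under a piecewise-linear ROC curve given as (FPR, TPR) points."""
    pts = sorted(points)  # order the points by increasing FPR
    area = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:]):
        # Trapezoid: mean of the two TPR values times the FPR width.
        area += 0.5 * (y1 + y2) * (x2 - x1)
    return area


# (FPR, TPR) pairs from Table II plus the end point (1, 1).
roc_points = [(0.0, 0.84), (0.04, 0.88), (0.16, 0.98), (0.74, 0.98), (1.0, 1.0)]
print(round(auroc_trapezoid(roc_points), 4))  # 0.9718
```

Summing the unrounded terms gives about 0.97. The 0.95 reported in the text results from truncating each term to two decimals before adding. Either value lies in the 0.9 to 1.0 range.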


V. CONCLUSION

Based on the results and analysis, several conclusions were reached:

The dragon fruit ripeness detection application is developed by first training on the prepared dataset (training process), which produces the labels and model that the system uses to examine the test data (testing process) and label the output pictures.

From the model training conducted with 20, 50, 100, and 500 epochs and a learning rate of 0.001, the maximum validation accuracy attained is 0.9667, for the model trained with 50 epochs. This study used new testing data, a total of 100 pictures, which were tested using the trained model. The test results of the 50-epoch model show 91% accuracy in classifying the ripeness of the dragon fruit.

The model in this study was tested using the confusion matrix method, under the condition that the 100 test pictures are new. Using the false positive rate and true positive rate of each epoch setting tested, the AUROC value obtained with the trapezoid method is 0.95, which is categorized as very good.

REFERENCES

[1] F. L. Bellec, F. Vaillant, and E. Imbert, “Pitahaya (Hylocereus spp): a new fruit crop, a market with a future,” Fruit, vol. 61, no. 4, pp. 237–250, 2006.

[2] T. Istianingsih and D. Efendi, “Pengaruh Umur Panen dan Suhu Simpan terhadap Umur Simpan Buah Naga Super Red (Hylocereus Costaricensis),” J. Hort. Indones., vol. 4, no. 1, pp. 54–61, 2013.

[3] Sustiono and W. S. Pambudi, “Rancang Bangun Pemilahan Kualitas Buah Naga Menggunakan Teknik Image Processing dengan Metode Image Segmentation HSV,” J. Sains dan Inform., vol. 1, no. 2, pp. 28–37, 2015.

[4] D. C. Khrisne and I. M. A. Suyadnya, “Indonesian Herbs and Spices Recognition using Smaller VGGNet-like Network,” in 2018 International Conference on Smart Green Technology in Electrical and Information Systems (ICSGTEIS), 2018, October, pp. 221–224.

[5] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in arXiv preprint arXiv:1409.1556, 2014.

[6] Stephanie, “Receiver Operating Characteristic (ROC) Curve: Definition, Example,” 2016. [Online]. Available: http://www.statisticshowto.com/receiver-operating-characteristic-roc-curve/. [Accessed: 09-Sep-2018].
